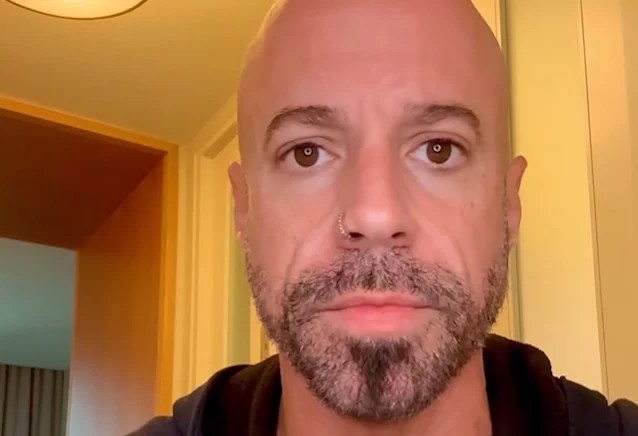

CHRIS DAUGHTRY BLASTS A.I. FAKES: “It’s Wrong. It’s Unethical. It’s Irresponsible. And It’s Dangerous.”

Chris Daughtry has never been one to hold back when it comes to protecting the integrity of his music. But this time, the American Idol alum and chart-topping rock frontman isn’t talking about record labels or streaming algorithms. He’s taking aim at something far more modern — and far more unsettling.

Artificial intelligence.

In a candid moment that has since ignited debate across music circles and social platforms, Daughtry delivered a blunt assessment of A.I.-generated deepfakes and synthetic vocals circulating online.

“I think it’s wrong. I think it’s unethical. I think it’s irresponsible. And I think it’s dangerous,” he said, addressing the growing trend of fake songs and manipulated audio clips created to mimic real artists.

The comments come amid a surge of A.I.-generated content that can replicate voices with startling accuracy. From fake duets to fabricated “new releases” by artists who never stepped into a studio, the technology has blurred the line between innovation and imitation — and Daughtry is not convinced the industry is prepared for the fallout.

A Voice That Isn’t Theirs

For Daughtry, the issue goes beyond creative experimentation. It strikes at the heart of artistic identity.

“When someone can take your voice, your tone, your inflections — the things you’ve spent a lifetime shaping — and replicate them without your permission, that’s not flattery. That’s theft,” he reportedly explained in a recent discussion about the technology’s impact.

The emotional weight behind his words reflects a broader anxiety spreading across the entertainment world. Artists have begun discovering “new songs” attributed to them online — tracks they never recorded, lyrics they never approved, performances they never gave.

For fans, the illusion can be convincing. For creators, it can feel violating.

The Ethical Line

A.I. has rapidly evolved from a futuristic novelty to a mainstream tool capable of generating realistic vocals, visual deepfakes, and even simulated live performances. Supporters argue that it opens new creative doors, allowing experimentation across genres and collaborations that would otherwise be impossible.

But Daughtry sees a line being crossed.

“When there’s no consent, no transparency, and no accountability — that’s where it becomes dangerous,” he said.

His concerns echo those of many musicians who fear not only reputational damage but also financial consequences. A fake song that goes viral can siphon streams, distort public perception, and potentially misrepresent an artist’s values or message.

There’s also the risk of misinformation. A fabricated lyric attached to a real artist’s name can spread quickly — especially in an era where content moves faster than verification.

A Growing Industry Tension

The music industry itself appears divided. Some labels are exploring A.I. tools to enhance production workflows, while others are lobbying for stricter regulations to protect intellectual property.

Legal frameworks are still catching up. Questions surrounding voice ownership, digital likeness rights, and copyright boundaries remain murky in many jurisdictions. Lawmakers have begun discussing protections, but comprehensive legislation has yet to solidify.

Daughtry’s stance adds a high-profile voice to the cautionary side of the debate.

Industry analysts say artists speaking out publicly could influence how companies deploy A.I. moving forward.

“When established musicians push back, it sends a signal,” one music business consultant noted. “It shifts the conversation from ‘cool tech’ to ‘serious consequence.’”

The Human Element

Beyond legality, Daughtry’s argument centers on something more personal: authenticity.

Music, he insists, is rooted in lived experience — heartbreak, triumph, grief, resilience. A synthetic replication may mimic tone, but it cannot replicate the journey behind it.

“There’s something sacred about the human voice,” he said. “It carries memory. It carries emotion. It carries truth.”

Fans have largely rallied behind his comments, with many expressing discomfort at the idea of unknowingly listening to A.I.-fabricated songs. Others argue that technology has always reshaped art, from Auto-Tune to digital production, and that resistance, while inevitable, may prove temporary.

Still, Daughtry remains firm in his conviction.

Where the Line Is Drawn

Importantly, he has not dismissed A.I. entirely. Like many artists, he acknowledges its potential for positive use — from studio experimentation to accessibility tools for independent creators.

But consent and transparency, he argues, must be non-negotiable.

“If it’s clearly labeled, if it’s collaborative, if everyone involved agrees — that’s one thing,” he explained. “But using someone’s identity without permission? That’s another.”

The debate surrounding A.I. in music is unlikely to slow down. As the technology advances, so will its capacity to blur the line between authentic and synthetic performances. For artists like Daughtry, the challenge isn’t innovation itself; it’s ensuring that innovation doesn’t erase the human core of creativity.

In a world where a voice can be cloned in seconds, his warning lands with particular weight.

Wrong. Unethical. Irresponsible. Dangerous.

Four words that capture a growing fear in the age of artificial intelligence — and a reminder that even as technology races forward, the fight to protect artistic truth is far from over.